The shortest and simplest explanation is that I left my academic job at OU to prioritize my personal life, and this is how I described it at first. I was in a long-distance relationship for the entirety of my time at OU, and my partner and I were ready to share a home base. But that explanation is too brief— it took years for me to reach this decision, and I didn’t make it lightly. Inspired by some friends who have recently shared more details about their departures, I decided to share a longer version of my story.

I want to acknowledge up top that I had a decent savings by the time I left (Thank you, Oklahoma!), another financial safety net for the job transition (my partner), and no family to take care of or other big responsibilities (Cookie is sooo tiny!). All of this released a lot of worry and uncertainty around my decision. I’m grateful for that, and I wish that everybody could choose what’s right for them without having to worry about how to make ends meet.

Settling into the field in graduate school

To be honest, there were indicators during my academic career that I wasn’t all-in. When I decided to attend UCSC, I specifically opted for on-campus housing so that if I decided to leave the program, there would be no penalties for breaking my lease. In my first year, I felt insecure about my level of dedication. I can’t really access what I was feeling at the time (it was, of course, FIFTEEN DANG YEARS DANG AGO), but it was something to the effect of “My life does not revolve around this.” I liked linguistics, but I liked other things, too! A new friend, who was in the MA program at UCSC and was planning to leave the field, used the phrase “I want to be a body, not a brain!” to describe their feelings, and I thought, “Me too! I’m also a body in this world!” I brought this up at a social event among grad students in a “Don’t we all feel this way?” kind of way, and I was surprised by the response. Apparently, we did not all feel this way. But I stayed in the program (I like linguistics!) and continued to try my hardest to do well.

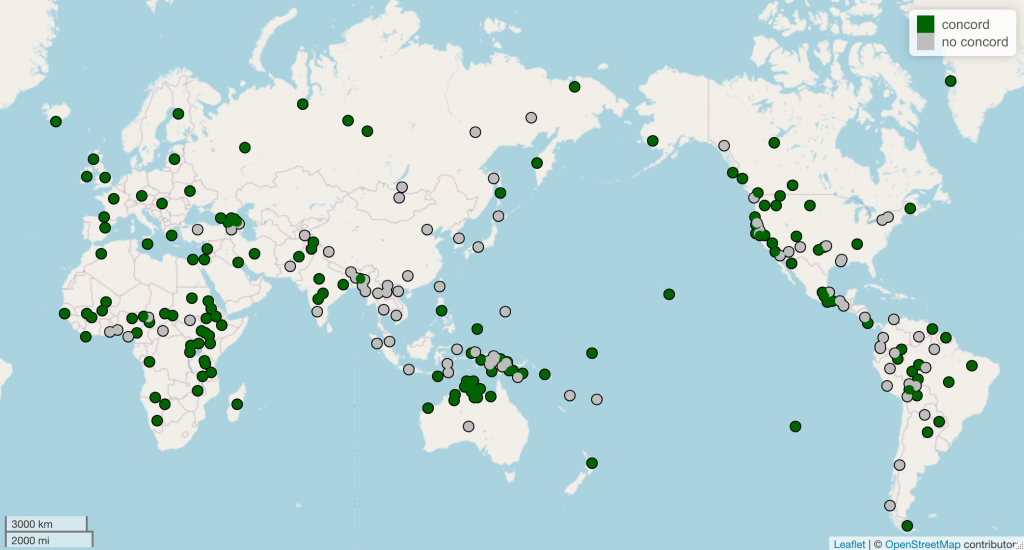

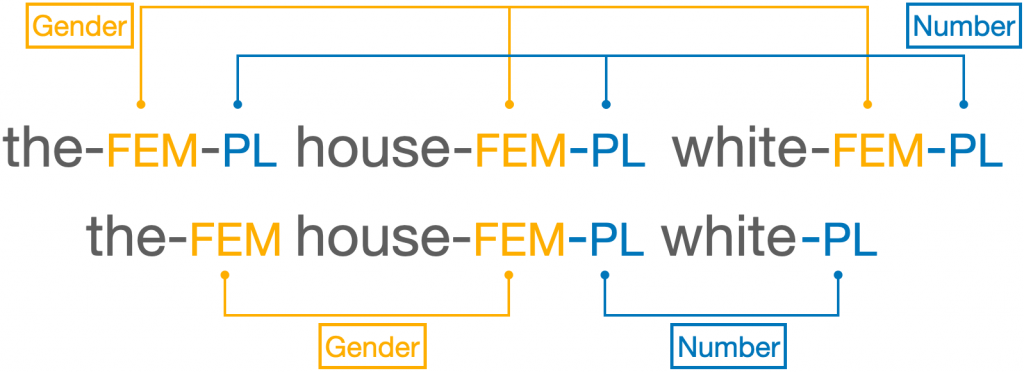

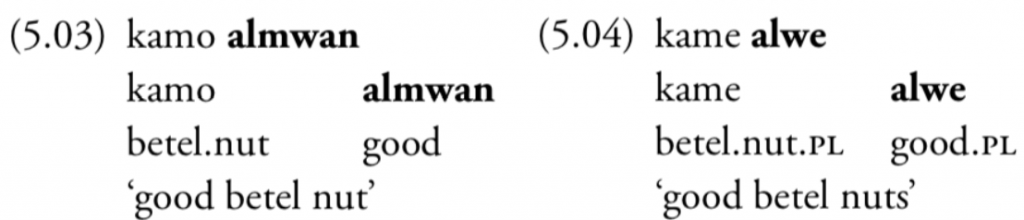

By the time I started to work on concord (in my third year), I had really settled into things. It was exciting to feel like I was breaking new ground, and even though I think I probably cried about once a week (lol), I recall enjoying my life and enjoying my work. When it came time to apply for jobs, it did not even cross my mind to go into industry, even though there were many recent graduates from my program who had found gainful employment in the tech industry. I was certain that I had to try to be a professor even as I was uncertain if it was what I wanted. In fact, I didn’t really consider asking my partner to move with me to Oklahoma, and one of the reasons was that I wasn’t sure if I wanted to be a professor for the rest of my life.

Add “decide your whole life” to my pre-tenure expectations

When I was getting ready to head to OU, my partner’s career was also starting to go in new directions, and we mutually decided to let each other follow these career paths without the pressure of making a decision. I spoke with faculty at OU and at other departments who would console me by saying things like, “Oh, such-and-such faculty member lives far away from their partner, and they make it work!” and I thought, “But this is not something you HAVE to deal with in life! And it is not what I want!” I knew, at least, that sacrificing sharing a home base with my partner was not something I was willing to do forever.

Maybe because of that, I put a lot of pressure on myself to figure out if being a professor was what I wanted in those early years. Asking my partner to give up his career to move to Oklahoma (or somewhere else, if I got a job elsewhere) was a big deal to me, and I wanted to be sure. But of course, I had never been sure (you know, like sure-sure). And furthermore, my early years at OU were difficult because I was having trouble getting work through prepublication peer review (which is a bad practice). It felt impossible to decide if this was truly what I wanted when I was also struggling to feel successful.

Over winter break in my second year, I had a panic attack while visiting my partner, precipitated by stress/anxiety about everything I needed to do for work. This was an important signal, as I have had maybe three panic attacks my entire life. I decided I should start seeing a therapist again.

One of the early revelations that came out of therapy was that I was (i) not fully committed to my position at OU (because I was “trying to figure out” if it was what I wanted) but (ii) acting in my role as though I was fully committed in terms of effort expended and expectations I had for myself. This made it very difficult to feel calm and secure, so I decided that I had to commit to my faculty position and my life in Norman as long as I was there. I bought a house at the end of my second year (a house I loved dearly; Thank you, Oklahoma!), and I released myself from the pressure of needing to figure it out. The next several semesters were generally pretty fun, I had some more success, and I liked my job.

“Wondering whether” can be an answer

In roughly the middle of my fourth year, I was still wondering if it was what I wanted. I realized that if I was still wondering after 3.5 years, that was enough of an answer. I also did the math and realized that I had 30+ working years left (Thank you, American capitalism!), which is plenty of time to build a second career. I liked my job enough, but I didn’t feel like it was my calling or passion, and I knew what I was sacrificing in order to pursue it. I began to think that it was worth it for me to try to find something else that either (i) I liked even more or (ii) did not ask me (or my loved ones!) to sacrifice so much.

After a particularly stimulating academic conference, I reconsidered the decision for the final time: Did I want to leave Academia entirely, or did I just need to leave my job at OU for a “better” academic job? Another friend who had left for industry reminded me in a helpful phone conversation that the academic job that I could be happy with over the long term was extremely difficult to get (if it even existed). For years, I had held on to the promise of an academic job where (i) I was treated well by colleagues and the institution (and that includes pay and research funding), (ii) I could research and teach what I wanted, (iii) I had dedicated and funded graduate students, and (iv) I lived in a place I wanted to live. But how many of those jobs are there? So many faculty members sacrifice some number of these things in order to be faculty. I had been at an institution where I was sacrificing to some degree on all four of those qualities, and though there were things about my work that I liked, I knew that they were not enough to endure those sacrifices indefinitely.

It would take time to find a new way to make money, but I had over 30 years to build a new career. I resolved to go for it.

What do I think about it now?

While my relationship wasn’t the reason I left academia, it was the catalyst for my decision. It forced me to wrestle with issues much quicker than I might have otherwise, but I ultimately arrived at the right decision for me. Even if another academic job existed in San Francisco, where my partner and I planned to live together, I knew that I wouldn’t apply for it. (And I still wouldn’t.)

Folks sometimes ask if I miss it, and the answer is yes and no (but mostly no)! I say this every time I talk about it, but I’m so glad that I spent 11 years of my working life getting paid to study generative linguistic theory and teach linguistics. Human language is one of the great loves of my life, and I find it incredibly fascinating and fun. I do not regret my decision to take the job at OU, I don’t regret my decision to stay for five years, and I don’t regret my decision to leave. I have said before that I don’t miss the amount of research I used to do, but I also don’t really miss teaching. That doesn’t mean I didn’t like it! It just means that there are so many things in life to be enjoyed, I found other things to enjoy, and it was enough to fill my cup. I treasure the good relationships with students and faculty I made while I was at OU, but I definitely do not miss grading papers. So it’s “yes!” because I remember it with fondness, but “No!” because I don’t regret leaving, and I don’t yearn for the work.

I was so worried about what the rest of my life would look like if it didn’t revolve around linguistics, teaching, and the broader linguistics and higher education community, but it’s okay. I still have friends from that time, and I still engage with linguistics research from time to time. But now, it’s entirely on my terms.

(This is the third post in a series of blog posts about my transition from academia to industry and my feelings about my time as an academic teacher and researcher. To read more, see the “industry transition” tag.)